- #Torch nn sequential get layers how to#

- #Torch nn sequential get layers code#

- #Torch nn sequential get layers series#

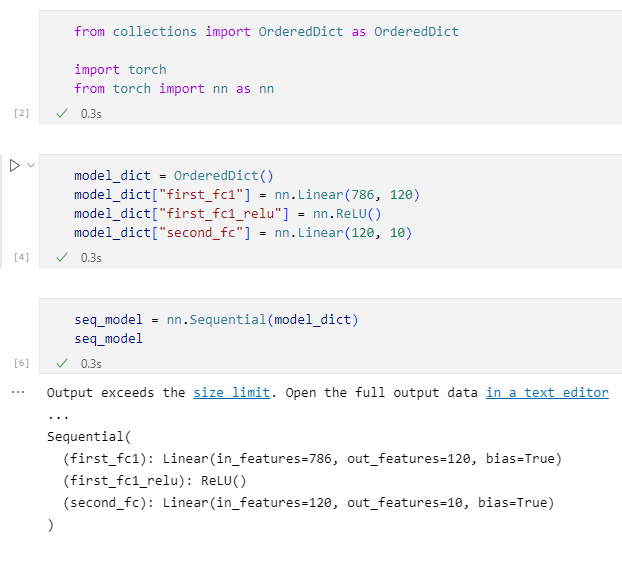

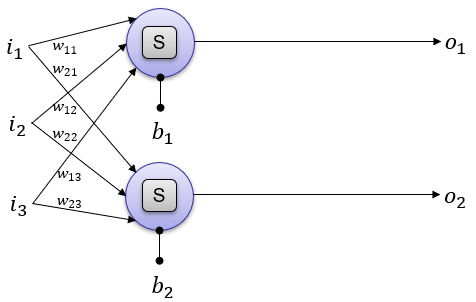

Which one to use? Which one is better? As we covered in Part 2, torch.nn.Module is basically the cornerstone of PyTorch. The way it works is you first define an nn.Module object and then call it on your input, which runs its forward method. This is an object-oriented way of doing things. On the other hand, nn.functional provides some layers / activations in the form of functions that can be called directly on the input, rather than defining an object. For example, to rescale an image tensor, you call torch.nn.functional.interpolate on it.

So how do we choose what to use when? The answer depends on whether the layer / activation / loss we are implementing has a state.

Normally, any layer can be seen as a function. For example, a convolutional operation is just a bunch of multiplication and addition operations. So it makes sense to just implement it as a function, right? But wait: the layer holds weights which need to be stored and updated while we are training. Therefore, from a programmatic angle, a layer is more than a function. It also needs to hold data, which changes as we train our network. I want to stress the fact that the data held by the convolutional layer changes. This means that the layer has a state which changes as we train.

To implement a function that does the convolutional operation, we would also need to define a data structure to hold the weights of the layer separately from the function itself, and then make this external data structure an input to our function. Or, to avoid the hassle, we could just define a class to hold the data structure and make the convolutional operation a member function. This really eases our job, as we don't have to worry about stateful variables existing outside of the function. In these cases, where we have weights or other states which might define the behaviour of the layer, we would prefer to use nn.Module objects. For example, a dropout / Batch Norm layer behaves differently during training and inference, and an nn.Module keeps track of which mode it is in.
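The contrast between a stateless function with external weights and a class that owns its weights can be sketched as follows. This is a minimal illustration, not the author's code: `make_conv_state`, `conv_fn` and `MyConv` are hypothetical names, and the shapes (3 input channels, 4 output channels, a 3×3 kernel) are arbitrary assumptions. Both versions delegate the arithmetic to `torch.nn.functional.conv2d`; the only difference is where the weights live.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

# Approach 1 (hypothetical helpers): a plain function. The weights live in an
# external data structure (a dict) that we must create, store and pass in.
def make_conv_state(in_ch, out_ch, k):
    return {
        "weight": torch.randn(out_ch, in_ch, k, k, requires_grad=True),
        "bias": torch.zeros(out_ch, requires_grad=True),
    }

def conv_fn(x, state):
    # Stateless: the output depends only on the arguments passed in.
    return F.conv2d(x, state["weight"], state["bias"], padding=1)

# Approach 2 (hypothetical class): the object owns its weights and exposes
# the convolution as a member function (forward).
class MyConv(nn.Module):
    def __init__(self, in_ch, out_ch, k):
        super().__init__()
        # nn.Parameter registers the tensors so they appear in .parameters()
        self.weight = nn.Parameter(torch.randn(out_ch, in_ch, k, k))
        self.bias = nn.Parameter(torch.zeros(out_ch))

    def forward(self, x):
        return F.conv2d(x, self.weight, self.bias, padding=1)

x = torch.randn(1, 3, 8, 8)

state = make_conv_state(3, 4, 3)
y_fn = conv_fn(x, state)

layer = MyConv(3, 4, 3)
with torch.no_grad():
    # Copy the same weights in so the two approaches can be compared.
    layer.weight.copy_(state["weight"])
    layer.bias.copy_(state["bias"])
y_mod = layer(x)  # calling the module runs forward()

print(torch.allclose(y_fn, y_mod))  # True
print(sum(p.numel() for p in layer.parameters()))  # 4*3*3*3 + 4 = 112
```

In practice you would reach for the built-in `nn.Conv2d`, which holds its `weight` and `bias` as registered parameters in the same way `MyConv` does, so an optimizer can find and update them through `.parameters()`.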

In PyTorch, layers are often implemented as either torch.nn.Module objects or torch.nn.functional functions. This comes up quite a lot, especially when you are reading open source code.
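A short sketch of both forms for a few common operations, assuming a random 4-D tensor in (batch, channels, height, width) layout. ReLU and interpolation carry no state, so the object form and the function form agree exactly; dropout depends on whether the network is training, which the module tracks for you but the function has to be told.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
x = torch.randn(2, 3, 4, 4)  # (batch, channels, height, width)

# Stateless activation: the object form and the function form agree.
relu_mod = nn.ReLU()
assert torch.equal(relu_mod(x), F.relu(x))

# Rescaling an image tensor: call F.interpolate directly on the tensor...
up_fn = F.interpolate(x, scale_factor=2, mode="nearest")
# ...or wrap the same operation in a module object.
up_mod = nn.Upsample(scale_factor=2, mode="nearest")(x)
assert torch.equal(up_fn, up_mod)
print(up_fn.shape)  # torch.Size([2, 3, 8, 8])

# Dropout has state: the module reads its own `training` flag,
# which .train() / .eval() switch.
drop = nn.Dropout(p=0.5)
drop.eval()
assert torch.equal(drop(x), x)  # identity at inference time

# The functional form keeps no state, so the mode must be passed explicitly.
assert torch.equal(F.dropout(x, p=0.5, training=False), x)

drop.train()
y = drop(x)  # each element is either zeroed or scaled by 1 / (1 - p) = 2
```

Note that `F.dropout` defaults to `training=True`, so forgetting the flag leaves dropout active at inference time. Inside a custom `nn.Module`, passing `training=self.training` keeps the functional call in sync with `train()` / `eval()`.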


You can get all the code in this post (and in the other posts as well) in the GitHub repo here. Other posts in the series include "Understanding Graphs, Automatic Differentiation and Autograd" and "Memory Management and Using Multiple GPUs".


Hello readers, this is yet another post in the series we are doing on PyTorch.
